Table of contents

Dynamic Programming is an algorithmic paradigm that is used to solve a specific class of problems. All the problems solvable by DP have 2 main properties: Optimal Substructure property and Overlapping subproblems .

Optimal substructure: The optimal solution of a problem can be achieved by using optimal solutions to the subproblems. In other words, if you can solve the subproblems correctly and optimally, you will be able to arrive at the optimal solution by combining the solutions of subproblems according to the required mathematical recurrence.

Overlapping subproblems: DP requires the same subproblems over and over again to arrive at the solution. So, if we are able to store the solutions to the subproblems, we can optimise our approach by avoiding solving the same problem repeatedly.

| # | Aspect | Memoization | Tabulation |

|---|---|---|---|

| 1 | Way of solving | Top down | Bottom up |

| 2 | Explicit specification of ordering | Not required | Required |

| 3 | Implementation | Recursive | Iterative |

| 4 | Solving style | Solves only subproblems needed | Solves all subproblems |

Solving a dp problem is a little methodological in nature. Following are the critical steps while approaching a dp problem:

Note:1. If you're using python, using PyPy to submit on atcoder. Classic python gives tle even to the codes that have otherwise acceptable time complexities.

2. The article will have detailed transition diagrams in the beginning and will become more abstract as we go ahead, eventually not appearing. Reader is encouraged to draw their own transition diagrams. The following link is an excellent resource for dp practice, as it contains a curated list of the most standard dp problems and I highly recommend checking it out.Link to atcoder dp contest

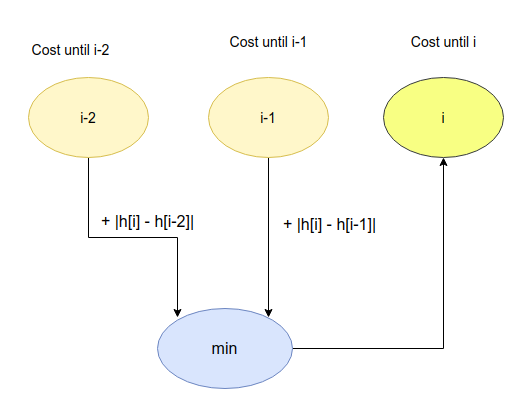

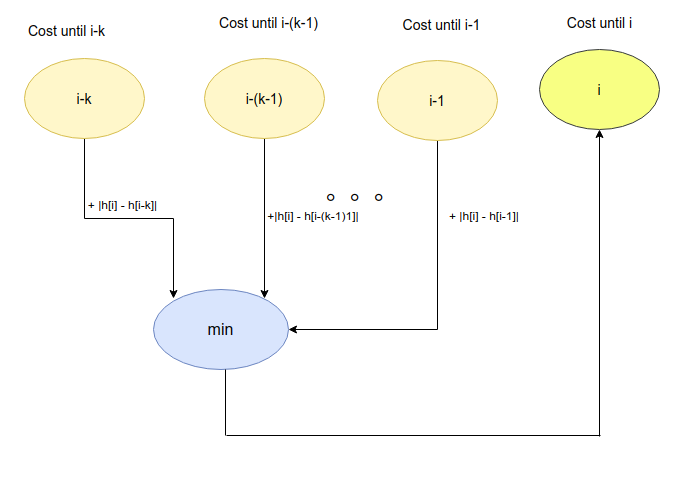

Frog B

Frog B is extremely similar to frog A, except for the fact that we will be dealing with upto n transitions.

In other words, dp[i] = min(dp[i], dp[i-1] + |h[i]-h[i-1]|, dp[i-2] + |h[i] - h[i-2]|, ... , dp[i-k] + |h[i] - h[i-k]|)

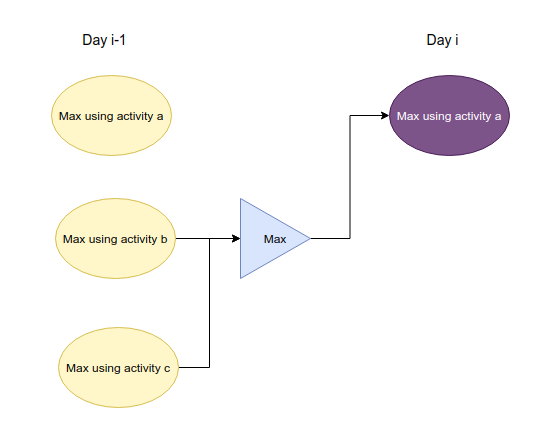

The problem asks you to find the max 'fun' given that no same activity can be done in 2 consecutive days.

Intuition: If activity a is done on day i, then activity that can be done on day i-1 can either be activity b or c.

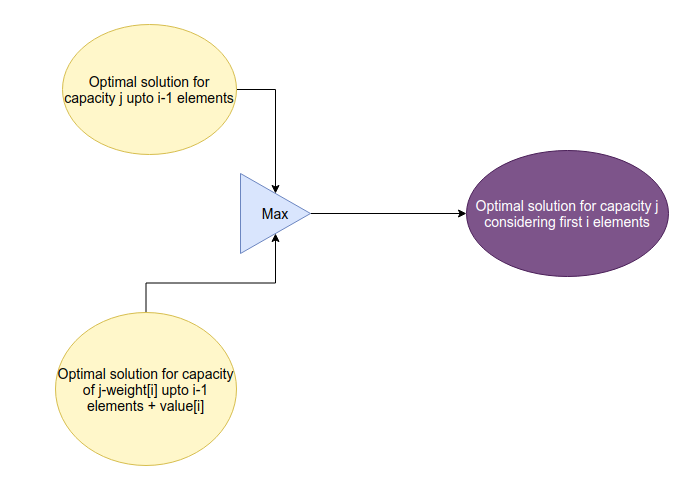

This problem is the classical 0/1 Knapsack problem. Given a set of n items each with weight w[i] and value v[i], and a sack which can hold atmost weight of W, find the maximum value you can hold using the sack.

Approach: There are 2 parameters that are crucial in determining the problem's outcome.

# i: current index, C: capacity

# call: f(N,W)

f(i,C,w[],v[]):

if i < 0:

return 0

if w[i] > C:

return f(i-1,C,w,v)

return max(f(i-1,C,w,v), f(i-1,C-w[i],w,v) + v[i])

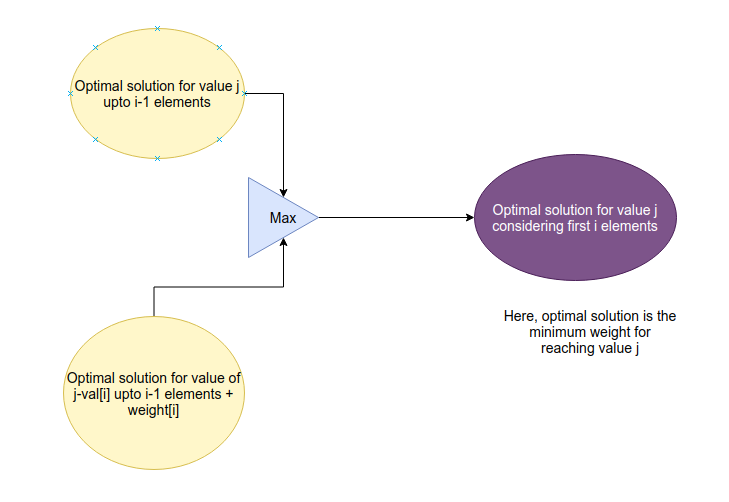

This is a variant to the knapsack, where the constraints on weights are larger ~(109). So, our previous solution, which uses weight as one the states will make the problem computationally infeasible. However, take note of the constraints of values. No value is greater than 105, and the maximum number of items is 100. In other words, the sum of all values cannot be larger than 107. Now the problem reduces to reframing the question, to use the 'value' state: (ranges from 0 to sum of all values).

We reframe the question as follows: Given a particular value, what is the minimum possible weight usable to get this value? (Return infinity if it is not possible to reach the value). Then, we can iterate over the range of reachable values(0 to sum(val)) and get the largest value for which the answer(i.e the min weight) is <= knapsack's cap.

Let us look at the states,base case, and transitions for this problem.

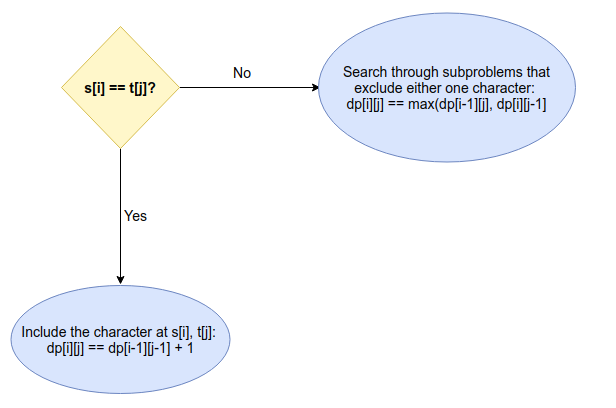

Classic LCS: Given strings s and t, find out (any one) longest common subsequence. Our strategy will be to first build the table for the finding optimal length of lcs, and then using the table to pick the lcs.

if s[i-1] == t[j-1]: #0 based indexing, hence then -1

dp[i][j] = dp[i-1][j-1] + 1

else:

dp[i][j] = max(dp[i-1][j],dp[i][j-1])

Given a Directed Acyclic Graph, find the length of the longest path.

This problem requires the second style of dynammic programming, memoization. We write a recursive code and memoize it using a single state dp array, which remembers the solutions to the subproblems, so that they are not recomputed. The main idea is to use dfs on all vertices seperately, and return the max length of the path which starts from that vertex.

Note: One vertex might be involved in multiple paths, therefore it is not possible to arrive at a correct answer by just visiting the vertex once.Given a grid with connectable rooms and blocked rooms, find the number of ways to reach the destination h,w from start 1,1.

if grid[i][j] is a connecting room:

dp[i][j] = (dp[i][j-1] + dp[i-1][j])

Given N coins and the probabilities of getting heads for each coins respectively, find the probability of getting more heads than tails. This is a nice introduction to probability dp problems. It's not really that different from a classic dp problem.

This is difficult problem. The problem states that you are given n plates, where each plate is one of the 4 types of plates of sushis, plates with 3,2,1 and 0 sushis. You have to calculate the expected number of operations till all the plates are empty.

Observation 1: The plates belonging to the same category are identical. This means that order does not matter. We only need to concerned with the counts of 3-plates,2-plates,1-plates, and 0-plates.

Observation 2: sum of all types of plates = n. Therefore we can rewrite 0-plates as: n - (3plates+ 2plates + 1plates), and avoid storing 0 plates as a seperate state.

In this article we talked about what dp is, the necessary theory behind it, followed by solving standard dp problems. At the end, I'd like to once again, emphasize the way of thinking in dp problems. To break it down, the flow goes like:

This requires some practice to get used to, but after solving a few problems, the whole process starts seeming more natural.

Well then, until next time!